This is part of a series of posts revolving around user interface design and development. The introduction and links to the other posts can be found here.

The last time I wrote about user interfaces, I discussed the new Unity UI system and our process of porting to it from Daikon Forge. That was a year and a half ago and a lot has changed since then. To keep things interesting we decided to move from Unity UI (yet another move?!) to Coherent UI, and I’ll explain why we did it.

Why Move… Again?!

Changing UI libraries is no small task and it’s definitely not something to be undertaken lightly, especially twice in the same project. So… why did we move? Ultimately it came down to two main points.

We found Unity’s UI was not up to standard at the time

When Unity UI came out I started porting our mod tools over to it. Whilst doing this I ran into lots of bugs. The framework was far from mature and lacked a lot of the functionality you would expect from UI middleware. Unity open sourced it, which was a great move, but even today there are features missing and the workflow just didn’t fit what we wanted.

We needed a mod friendly UI system

This is an especially important point. As you may know by now, flexible mod support is one of our core design pillars for Solitude, and Unity UI just isn’t mod friendly at all. It’s heavily Unity Editor based and, while you can set up the UI at runtime, it takes a lot of code to achieve simple, reliable results. We needed something that modders could easily edit, play around with and get into the game. The only way we could manage that with Unity UI would be to write a layout tool as part of the game mod tools, plus a converter for turning that custom layout format into Unity UI. To be blunt, that wasn’t going to happen. We’re busy enough with critical features as it is, so writing a UI converter would be too much work for us.

So I decided to expand our search, which led me to find Coherent UI.

Coherent UI

Coherent UI is a user interface middleware developed by Coherent Labs. It integrates a wrapped Chromium renderer (think: Chrome web browser) to provide HTML, CSS and JavaScript support for user interfaces. I took to my research and after a few example projects I quickly realised how perfect this would be for Solitude. This was in January 2015.

Coherent provided loading of UI in the form of HTML, CSS and JavaScript, along with the bindings and hooks for linking the renderer into Unity, but it didn’t provide any kind of higher-level framework for controlling all of these. To keep in line with our mod support design pillar we needed a flexible system for modders to define user interface components that can be loaded into and removed from the game. It took us a few weeks of solid development effort, but once we had finished we had a framework that allowed us to create user interface components and bind them to the game. Not just that! We were able to separate the UI logic from the components, so the UI logic stayed in the Lua mod scripting layer and the pure view stayed in the JavaScript code.
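As a rough illustration of that separation (not the actual Solitude framework), a minimal sketch might look like the following. Every name here (`ComponentRegistry`, `createStatusLabel`, `sendToLogic`) is hypothetical, and the plain callback only stands in for whatever bridge forwards events to the Lua layer; no Coherent UI API is used.

```javascript
// Hypothetical sketch: none of these names come from Coherent UI or Solitude.

// Registry of view components that can be added and removed at runtime.
class ComponentRegistry {
  constructor(sendToLogic) {
    // sendToLogic is an assumed stand-in for the bridge to the scripting
    // layer. Here it is just a callback: (componentName, event, payload).
    this.sendToLogic = sendToLogic;
    this.components = new Map();
  }

  // Register a component under a unique name, as a mod might do on load.
  register(name, component) {
    if (this.components.has(name)) {
      throw new Error(`Component "${name}" is already registered`);
    }
    this.components.set(name, component);
    // The view gets an emit function so it can raise events
    // without containing any game logic itself.
    component.mount((event, payload) => this.sendToLogic(name, event, payload));
  }

  // Remove a component; returns true if it was registered.
  unregister(name) {
    const component = this.components.get(name);
    if (!component) return false;
    component.unmount();
    this.components.delete(name);
    return true;
  }

  // Push state from the logic layer into a view; returns false if unknown.
  update(name, state) {
    const component = this.components.get(name);
    if (!component) return false;
    component.render(state);
    return true;
  }
}

// A pure view: it renders the state it is given and emits events, nothing more.
// A real component would write to the DOM; this one keeps a string instead.
function createStatusLabel() {
  let emit = null;
  let lastRendered = "";
  return {
    mount(emitFn) { emit = emitFn; },
    unmount() { emit = null; },
    render(state) { lastRendered = `Status: ${state.status}`; },
    click() { if (emit) emit("clicked", { text: lastRendered }); },
    get text() { return lastRendered; },
  };
}

// Usage: the "logic layer" here is an array that records incoming events.
const received = [];
const registry = new ComponentRegistry((name, event, payload) => {
  received.push({ name, event, payload });
});
const label = createStatusLabel();
registry.register("statusLabel", label);
registry.update("statusLabel", { status: "online" });
label.click();
```

The point of the design is that the view only knows how to render state and emit events, while all decisions are made on the other side of the callback, so either side can be replaced by a mod without touching the other.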

This framework proved to be very flexible. It allows modders to inject their UI mods into the core Solitude game, or to provide UI functionality for more extensive mods they create, all in a well-known format. It even allows for real web browsing! (We’re limiting that for the core game but modders are welcome to unlock it with a simple change.)

The downside to Coherent UI is that it is closed source and expensive for a company of our size, although for a larger company the cost is very reasonable. At the time we took the subscription approach with the intention of upgrading to a full game license when we had the funds for it.

So, what else could we do with Coherent? Well… it allowed us to truly get the most out of Solitude’s terminal system. Solitude Tech Demo 1 had terminals but they were mostly faked: they weren’t synced up for multiplayer support and they were definitely not moddable. Coherent allowed us to get to our Terminals 2.0!

As we were pushing Coherent hard, we started to feel performance issues in two main areas. We plan to have a lot of these terminals in one area at any one time, and we intended to show in-game video feeds (viewscreens) on them too. Both were causing performance problems and were a cause for concern. On top of that, Coherent informed us that the subscription tier was being discontinued and that, once the time extension they offered us ran out, we would have to upgrade. Since a one-year extension wouldn’t cover us through to the release of Solitude, we decided to upgrade using the subscriber discount they provided.

Coherent GT

During this time Coherent suggested we try their new version, Coherent GT. It apparently brought a lot of performance improvements with it, so I spent some time investigating. I was very happy with what I found, as it solved both of the performance issues that had concerned me only a few weeks earlier! With that, we fully upgraded to Coherent GT, which allowed us to fully implement viewscreens in Solitude.

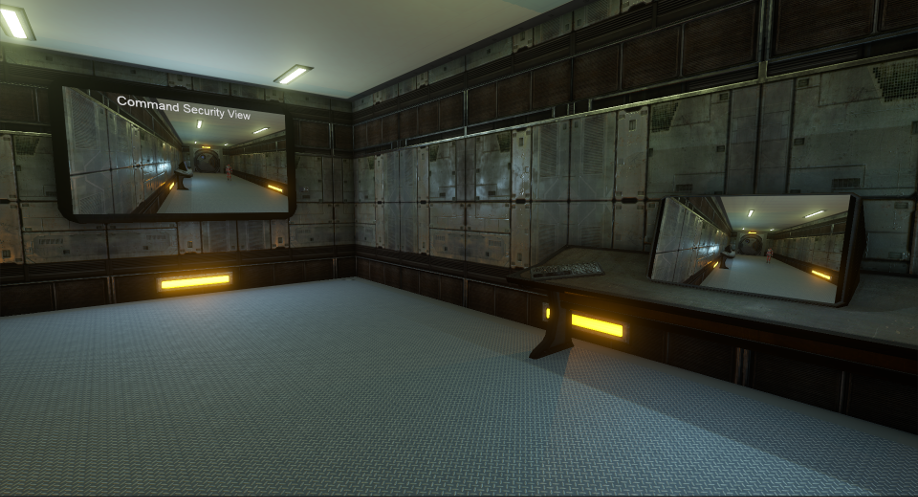

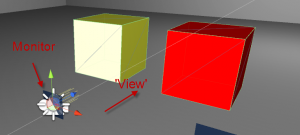

The terminal viewscreen feed is fully embedded in the terminal’s HTML (DOM) structure, so you can easily manipulate it with JavaScript and overlay any user interface on top of it, just as you usually would on the terminals. Here is a very basic example: an in-game camera module on the wall (to the right of the large viewscreen) whose view is shared between two terminals, each showing the viewscreen feed with a basic overlay.

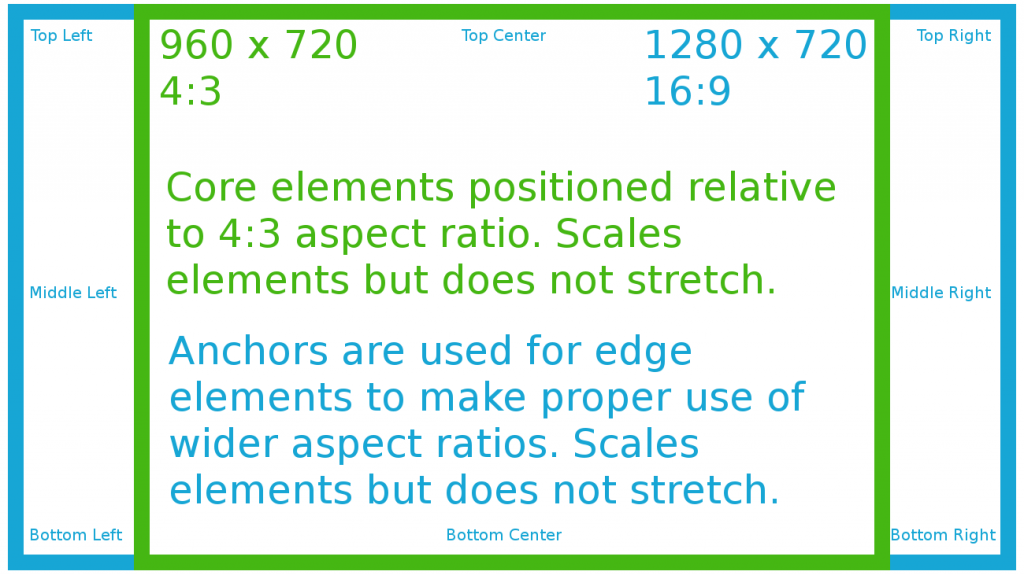

So there we have it. We’re not changing UI systems again and Coherent is, without a doubt, the best fit for us. We get to use a technology that mastered scaling and aspect ratios long ago (web development), access to all the JavaScript libraries that exist, a fast, multi-core UI renderer and a system that is fully moddable. Sounds like a win to me.

I’ll make another post to go into the terminal system in more detail, as there’s a lot going on there. Hopefully you found this interesting and, as usual, feel free to comment, email or grab me on Twitter at @CWolf.

Thanks for reading!