In the spare time between our contract work I’ve been developing technology for our future game. One piece of tech that we feel will feature heavily in the game is interactive monitors and consoles. While going through my thoughts I immediately remembered the strong impression that was made on me when I first played Doom 3 and came across their interactive monitors. I loved how they integrated more functionality into an area of games that was traditionally just an ‘on/off’ switch-style game entity.

I wanted to create something simple and effective similar to what Doom 3 achieved.

So, with the decision to do some tech prototyping I went about wanting the monitors to be as flexible as possible. It’s still early days but I intend to have lots of different monitor types supported. In my eyes the system should support quick access, full focus in-environment and total immersion monitors. So, here are the initial set of types I am dwelling over:

Environment Monitors – The player’s view is zoomed in and locked onto it. It will still show part of the environment around it.

Fullscreen Monitors – Monitors that go into full screen mode.

Security Cams – Full screen, limited movement angles and possibly screen effects

Avatar Monitors – Full screen, full movement control (for turrets or robots/probes)

So… with those types in mind I went to create a prototype. You can see my efforts below in the Youtube video.

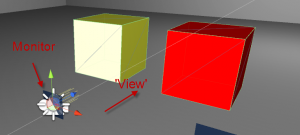

As you can see, it’s very early days but this proves the concept pretty well. Now… how is this implemented? As we’re using Unity Pro this is where a Pro specific feature steps in – RenderTexture. It allows a camera to render, or draw, its view to a texture. This texture then can be used on, for example, a Plane. By setting up a scene where the cubes can be viewed; the Plane can show this. By doing some raycasting on the Plane, then continuing the raycast from the intended camera – you can detect hits on the objects and then trigger the interaction.

In general this approach works well but I still have a few design choices to chew on. Firstly, while the camera and RenderTexture approach works really well for ‘real’ views such as Avatar or Security camera mode, since it’s looking at something in the ‘world’, it gets a little cloudy for the monitors and consoles. Where should the ‘view’ exist in the game scene for a monitor with just buttons / user interface options / typical computer interfaces and feedback? The two main trains of thought on this type of thing are:

This keeps the ‘view’ positionally relevant to the monitor and is easy to remember where the view is. The problem is that it feels messy to me. You can enable / disable layers in Unity so it doesn’t need to be messy but it just feels a little wrong to me, however, the ‘view’ in-game will be culled so you won’t ever see the actual ‘view’ – just the result on the monitor.

Very far away from the active game world

This keeps the ‘views’ away from any active area but our game will make use of a very large in-scene area. While our game will use a very large in-scene area, ultimately, there will always be space for it somewhere far out. We’ll be loading world data in and out as the player(s) move. I’m not too comfortable with this approach though as the ‘views’ will be a little dislocated from the monitor – it feels wrong to me too.

The game scene is the building area of the game. The active area is what we would load game objects into. This leaves some ‘no mans land’ which we could add the monitor ‘views’ to.

I’ll have to give this issue a good bit of thought. I’m going to see if there are other options available to me that I’ve not thought up yet. I’m more inclined to go with the first option but we’ll see. One very interesting side effect I encountered has been getting an effect similar to Portal’s portals. By leaving a mouse controller active on the security camera monitor when it should be turned off gives a very similar effect to what it’s like to look through a portal.

We’ll see how the tech progresses – thanks for reading!